Put a human in the middle of AI

When using any generative AI to create content, you need to put a human in the middle.

It seems everyone is excited about how to leverage generative AI to help them do their work. ChatGPT, Google's Gemini, and Microsoft's Copilot are in high demand as people look for ways to apply AI.

At the same time, keep in mind that generative AI works best in well-defined areas: topics that already have a large body of writing behind them. The AI system is trained on these works and generates new text based on the content it was trained on. As a result, generative AI does an excellent job when the topic is well understood. For a lot of day-to-day work, that means generative AI can do the "heavy lifting" of writing ad copy, job descriptions, and other kinds of rote content. You can also use generative AI to write essays, technical descriptions, and how-to procedures if the topic has been described before.

For new and emerging topics, generative AI will be at a disadvantage because it will have little to draw on. Humans first need to write the material that AI systems will use for training.

AI can be right and wrong at the same time

One topic that has been well described is the Star Wars franchise. From the first movie in 1977 to the latest TV shows, books, and comics, there's a wealth of content about events a long time ago in a galaxy far, far away. So you'd think that generative AI would do a great job in describing events in the Star Wars universe.

Here's an example prompt I asked of ChatGPT:

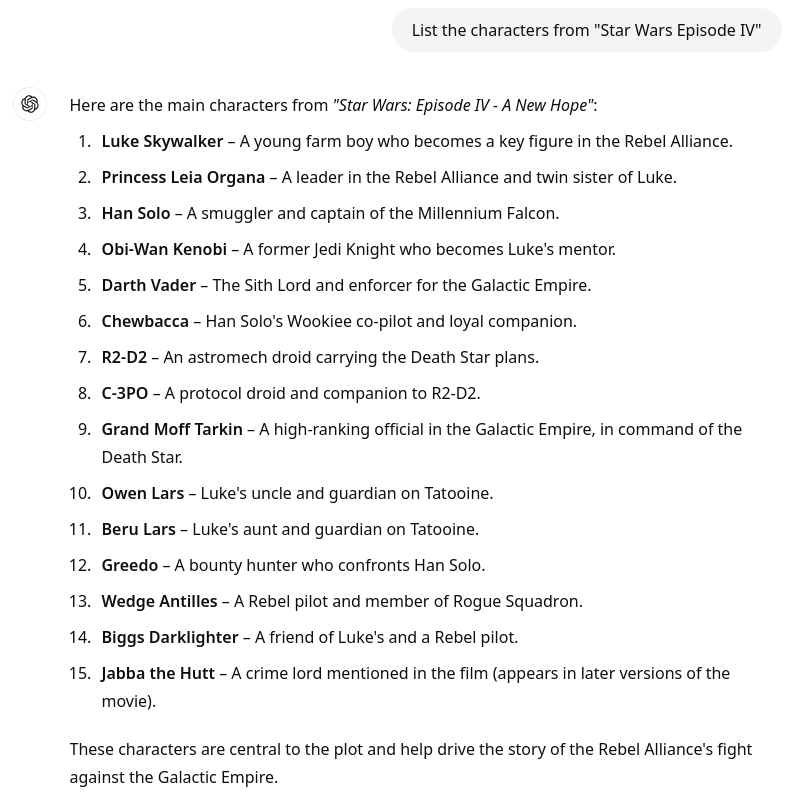

> List the characters from "Star Wars Episode IV"This is a fairly straightforward request to list the major characters from the first movie, and I use this prompt every six months or so, as a sort of "measure" of how well generative AI can respond to prompts. As in previous experiments, ChatGPT generated a list of the major characters, with a short description of each:

If you had never seen the original Star Wars (1977) and I asked you to watch the movie and list the major characters, it might be tempting to ask AI to make the list for you instead. The response looks correct, and technically, everything in it is correct. But several details don't actually appear in the original Episode IV movie:

The word "Sith" is never said on screen until Episode I (1999). Yet in this description, ChatGPT describes Darth Vader as "The Sith Lord and enforcer for the Galactic Empire."

Tarkin is only referred to as "Governor," not "Grand Moff." The "Moff" and "Grand Moff" titles were invented for the movie and later used in spinoff media such as books and comics, but on screen in the first movie, Tarkin is only ever called "Governor."

Leia is not revealed as Luke's sister until Return of the Jedi (1983). But the ChatGPT response lists Princess Leia as "A leader in the Rebel Alliance and twin sister of Luke."

Wedge and Biggs are not given last names in the movie. Wedge is never identified by anything more than his first name and callsign in any movie, and the same is true for Biggs. Their last names are provided in a variety of Expanded Universe books and games, but they are never mentioned on screen in the movies.

The response includes other details that are not mentioned in Episode IV, but these examples already show that ChatGPT is not relying solely on the movie for its sources. It's generating this information from sources outside the movie, even though the prompt asked specifically about Episode IV.

Put a human in the middle

When using any generative AI to create content, you need to put a human in the middle. At some point, a human needs to evaluate the AI response and fact-check it. This can be tedious for a very long or highly technical piece, and fact-checking the AI requires the same knowledge of the topic that you would need to write the article yourself. For that reason, relying on generative AI to produce new content wholesale may not be a good idea.

But generative AI has many more uses than writing entire articles. Technical writers and technical editors can use generative AI to summarize content or to generate a list of interesting headlines. These tasks are well within the capability of generative AI, and the output takes little effort to validate. Using or adapting an AI-generated headline is a low-effort task, yet it saves real time for an editor who needs to write captivating headlines or titles for dozens of articles in a pipeline.

I believe generative AI is a boon to technical and professional writing, and writers who can leverage AI will outperform writers who do not use AI. But we cannot use generative AI without understanding its limitations.